Red Hat OpenShift Pipelines is a component of Red Hat OpenShift Container Platform built on top of the Tekton Pipelines project, which is an open source framework. OpenShift Pipelines provides a way to create and manage CI/CD pipelines natively within OpenShift.

When using OpenShift Pipelines, it is common to see exited containers on worker nodes after pipeline tasks run. This is a consequence of how OpenShift Pipelines executes tasks. Each task in a pipeline runs in a separate pod, and these pods are ephemeral: they terminate when the task completes, which leaves an exited container on the worker node. This is a fundamental design choice in Tekton to achieve isolation and reproducibility. If one task encounters an issue or behaves unexpectedly, it does not adversely affect the other tasks in the pipeline.
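To observe this one-pod-per-task behavior on a cluster, you can list the pods that belong to a single PipelineRun. The sketch below is a minimal illustration. It assumes that Tekton applies its `tekton.dev/pipelineRun` label to TaskRun pods, and the function name `list_pipelinerun_pods` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the pods Tekton created for one PipelineRun.
# Assumes the tekton.dev/pipelineRun label that Tekton sets on TaskRun pods.
list_pipelinerun_pods() {
  local run="$1" ns="$2"
  # One line per TaskRun pod: pod name and its current phase.
  oc get pods -n "$ns" -l "tekton.dev/pipelineRun=${run}" \
    -o custom-columns=POD:.metadata.name,PHASE:.status.phase --no-headers
}
```

For example, `list_pipelinerun_pods <pipelinerun-name> pipelines-tutorial` prints one line per TaskRun pod. Pods of completed tasks stay listed with a terminal phase such as `Succeeded` until they are cleaned up.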

However, the existence of terminated pods may result in an accumulation of exited containers on the worker nodes, potentially leading to increased disk consumption within the cluster, as shown below:

```
sh-5.1# cd /var/log/pods/pipelines-fqnz5-build-image-pod_584de7f8-c3be-497b-9661-3449df067fd7
sh-5.1# du -h
4.0K ./prepare
4.0K ./place-scripts
0 ./working-dir-initializer
12K ./step-build-and-push
```

Furthermore, this accumulation can saturate the gRPC communication between the container runtime and the kubelet. This can cause unintended side effects on the worker nodes and may even make them unresponsive.
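One way to gauge how many exited containers have built up on a node is to query the container runtime directly through a debug pod. This sketch assumes CRI-O with `crictl` available on the host, as on standard OpenShift nodes, and permission to run `oc debug`. `count_exited_containers` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count exited containers on one worker node.
# oc debug starts a pod on the node; chroot /host gives access to the host's crictl.
# crictl -q prints only container IDs; grep -c . counts the non-empty lines.
count_exited_containers() {
  local node="$1"
  oc debug "node/${node}" -- chroot /host crictl ps -a --state exited -q \
    | grep -c .
}
```

Run it as `count_exited_containers <node-name>`. If the count keeps growing between pruner runs, finished pipeline pods are probably not being cleaned up.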

To maximize the efficient use of physical resources in Red Hat OpenShift Pipelines, a pruner component is employed to automatically clear out obsolete TaskRun and PipelineRun objects along with their terminated pods across different namespaces. This frees up these resources for active runs, ensuring optimal resource allocation.

On the other hand, customers might need to keep the history of their PipelineRun and TaskRun objects for troubleshooting purposes. In that case, pruning alone is not an option, because it discards that history. This is where the Tekton Results feature comes into play.

Tekton Results is designed to help users organize pipeline workload history in a logical manner and move long-term results to persistent storage.

In this article, we show how to prune PipelineRun and TaskRun objects at the cluster level as well as at the namespace level. In addition, we showcase the Tekton Results feature and its capabilities.

Prerequisites

The following prerequisites must be satisfied before beginning this process:

- OpenShift Container Platform 4.15 or later

- Red Hat OpenShift Pipelines Operator 1.16
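To confirm the first prerequisite, you can read the cluster version from the `ClusterVersion` resource and compare it with 4.15. The helper names below are hypothetical, and `version_ge` relies on `sort -V` from GNU coreutils.

```shell
#!/usr/bin/env bash
# version_ge A B: succeeds when version A >= version B, using version-aware sorting.
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: check the cluster against the 4.15 prerequisite.
check_cluster_version() {
  local current
  current="$(oc get clusterversion version -o jsonpath='{.status.desired.version}')"
  if version_ge "$current" "4.15"; then
    echo "OpenShift ${current}: OK"
  else
    echo "OpenShift ${current}: older than 4.15" >&2
    return 1
  fi
}
```

The installed operator version is typically visible with `oc get csv -n openshift-operators`.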

Auto pruning

Inactive TaskRun and PipelineRun objects, along with their associated pods, can consume valuable physical resources that could otherwise support active runs. To optimize resource utilization, Red Hat OpenShift Pipelines provides a pruner component that automatically identifies and removes these obsolete objects and their pods.
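The pruner runs as a scheduled job in the `openshift-pipelines` namespace. Its log, such as the tekton-resource-pruner output shown later in this article, is the easiest way to see what it removed. The sketch below prints the log of the newest such job. It assumes that the Job names start with `tekton-resource-pruner`, and `latest_pruner_logs` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the log of the most recent pruner Job.
# Assumes the pruner's Job names start with "tekton-resource-pruner".
latest_pruner_logs() {
  local ns="openshift-pipelines" job
  # Jobs sorted oldest to newest; keep the newest pruner Job.
  job="$(oc get jobs -n "$ns" --sort-by=.metadata.creationTimestamp -o name \
    | grep 'tekton-resource-pruner' | tail -n 1)"
  if [ -z "$job" ]; then
    echo "no tekton-resource-pruner job found in ${ns}" >&2
    return 1
  fi
  oc logs -n "$ns" --all-containers=true "$job"
}
```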

You can configure the pruner at the cluster level through the TektonConfig custom resource. Its settings apply to all user namespaces in the cluster. Additionally, you can fine-tune the pruner's behavior for specific namespaces through namespace annotations, tailoring cleanup to your operational needs.
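You can edit the TektonConfig resource with `oc edit`, or read and merge-patch its pruner section from the command line. A minimal sketch of the command-line approach follows, assuming the default TektonConfig named `config`. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Print the current cluster-wide pruner settings from the TektonConfig named "config".
show_pruner_config() {
  oc get tektonconfig config -o jsonpath='{.spec.pruner}{"\n"}'
}

# Hypothetical helper: merge-patch keep and schedule for TaskRun and PipelineRun pruning.
set_cluster_pruner() {
  local keep="$1" schedule="$2" patch
  patch="$(printf '{"spec":{"pruner":{"resources":["taskrun","pipelinerun"],"keep":%d,"schedule":"%s"}}}' \
    "$keep" "$schedule")"
  oc patch tektonconfig config --type=merge -p "$patch"
}
```

A JSON merge patch replaces lists wholesale, so the `resources` list is always written in full. For example, `set_cluster_pruner 5 '*/3 * * * *'` applies settings equivalent to the modified cluster-scope example in this article.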

However, it's crucial to understand that the pruner does not allow for selective auto-pruning of individual TaskRun or PipelineRun instances within a namespace. This means that while you can manage resource cleanup at a higher level or for entire namespaces, granular control over specific runs is not supported in this automated process.

Cluster scope

To enable cluster-level pruning of TaskRun and PipelineRun objects, you can modify the default pruner configuration in the TektonConfig object, which is created automatically when the operator is installed. Starting with Red Hat OpenShift Pipelines 1.6, auto-pruning is enabled by default with the following configuration:

```yaml
apiVersion: operator.tekton.dev/v1alpha1
kind: TektonConfig
metadata:
  name: config
# ...
spec:
  pruner:
    disabled: false
    keep: 100
    resources:
      - pipelinerun
    schedule: "0 8 * * *"
# ...
```

In the following example, we modify the default configuration by adding TaskRun as a resource to be pruned. Additionally, we reduce the number of retained TaskRun and PipelineRun objects from 100 to 5 and adjust the pruner's execution interval to every 3 minutes. See below:

```yaml
apiVersion: operator.tekton.dev/v1alpha1
kind: TektonConfig
metadata:
  name: config
# ...
spec:
  pruner:
    resources: # The resource types to which the pruner applies
      - taskrun
      - pipelinerun
    keep: 5 # The number of recent resources to keep
    schedule: "*/3 * * * *" # Cron expression: run every 3 minutes
# ...
```

The log of the tekton-resource-pruner job in the openshift-pipelines namespace shows that the pruner iterates through the user namespaces and deletes TaskRun and PipelineRun objects. In our example, it keeps only the five most recent TaskRun and PipelineRun objects in each namespace and deletes the rest, starting with the oldest. See below:

```
$ tkn taskrun delete --keep=5 --namespace=redhat-ods-monitoring --force
All but 5 TaskRuns(Completed) deleted in namespace "redhat-ods-monitoring"
$ tkn pipelinerun delete --keep=5 --namespace=redhat-ods-operator --force
All but 5 PipelineRuns(Completed) deleted in namespace "redhat-ods-operator"
$ tkn taskrun delete --keep=5 --namespace=redhat-ods-operator --force
All but 5 TaskRuns(Completed) deleted in namespace "redhat-ods-operator"
$ tkn pipelinerun delete --keep=5 --namespace=rhods-notebooks --force
All but 5 PipelineRuns(Completed) deleted in namespace "rhods-notebooks"
$ tkn taskrun delete --keep=5 --namespace=rhods-notebooks --force
All but 5 TaskRuns(Completed) deleted in namespace "rhods-notebooks"
```

Namespace scope

To configure automatic pruning of TaskRun and PipelineRun in specific namespaces, you can apply annotations at the namespace level. This configuration applies to the entire namespace, meaning individual TaskRun and PipelineRun objects cannot be selectively pruned.
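Instead of editing the Namespace manifest, you can set the same annotations with `oc annotate`. The sketch below takes that approach. `annotate_namespace_pruner` is a hypothetical helper, and `--overwrite` lets it change values that are already present.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set namespace-level pruner annotations that override TektonConfig.
annotate_namespace_pruner() {
  local ns="$1" keep="$2"
  oc annotate namespace "$ns" --overwrite \
    "operator.tekton.dev/prune.resources=taskrun, pipelinerun" \
    "operator.tekton.dev/prune.keep=${keep}"
}
```

Running `annotate_namespace_pruner pipelines-tutorial 2` produces the same annotations as the Namespace example in this section.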

In the example below, automatic pruning is enabled for both TaskRun and PipelineRun objects in the pipelines-tutorial namespace, retaining the latest two objects of each type:

```yaml
# ...
kind: Namespace
apiVersion: v1
metadata:
  name: pipelines-tutorial
  # ...
  annotations:
    operator.tekton.dev/prune.resources: "taskrun, pipelinerun"
    operator.tekton.dev/prune.keep: "2"
# ...
```

Namespace annotations override the default TektonConfig settings for that namespace. The following log of the tekton-resource-pruner job in the openshift-pipelines namespace shows this:

```
$ tkn pipelinerun delete --keep=2 --namespace=pipelines-tutorial --force
All but 2 PipelineRuns(Completed) deleted in namespace "pipelines-tutorial"
$ tkn taskrun delete --keep=2 --namespace=pipelines-tutorial --force
All but 2 TaskRuns(Completed) deleted in namespace "pipelines-tutorial"
```

Tekton Results

Tekton Results improves the usability and efficiency of Tekton Pipelines by giving users a structured way to manage and store the history of their pipeline runs. It keeps the core pipeline control logic clearly separated from the long-term storage of results. Note that at the time of writing, Tekton Results is still a Technology Preview feature, and the latest stable release of Red Hat OpenShift Pipelines is 1.16.

Tekton Results archives PipelineRun and TaskRun executions as results and records. For each completed PipelineRun or TaskRun custom resource (CR), Tekton Results generates a corresponding record that includes the CR's YAML manifest. It preserves this manifest after the TaskRun or PipelineRun CR is deleted and makes it available for viewing and searching. Figure 1 illustrates this.

Tekton Results uses a PostgreSQL database to store pipeline metadata and integrates with different persistent storage backends for log retention. You can configure the installation to use either a PostgreSQL server that is installed automatically with Tekton Results or an existing external PostgreSQL server in your deployment.
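To see what is stored, you can query the database from a shell inside the Tekton Results PostgreSQL pod, as in the psql session later in this article. The connection parameters below mirror that session, and the `records` table name comes from the same session. The `type` column is an assumption about the Tekton Results schema, and `count_records_by_type` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count archived records per type in the Tekton Results database.
# Host, port, database, and user mirror the psql session in this article;
# the "type" column of the records table is an assumption about the schema.
count_records_by_type() {
  psql -h tekton-results-postgres-service.openshift-pipelines.svc.cluster.local \
    -p 5432 -d tekton-results -U result \
    -c 'SELECT type, count(*) FROM records GROUP BY type ORDER BY type;'
}
```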

More details on how to use Tekton Results in order to query for results and records can be found here.
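The `opc` commands in this article read the API address from a `RESULTS_API` environment variable. The sketch below derives that address from the API route. It assumes a route named `tekton-results-api-service` in the `openshift-pipelines` namespace, served on port 443. `results_api_addr` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the host:port address of the Tekton Results API
# from its route (route name and namespace are assumptions for a default install).
results_api_addr() {
  local host
  host="$(oc get route tekton-results-api-service -n openshift-pipelines \
    --no-headers -o custom-columns=':spec.host')"
  printf '%s:443\n' "$host"
}
```

Set the variable with `export RESULTS_API="$(results_api_addr)"` before running the `opc` commands.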

Furthermore, Tekton Results provides a dashboard that is well integrated into the OpenShift console under the pipeline section, as shown in Figure 2.

Tekton Results in action

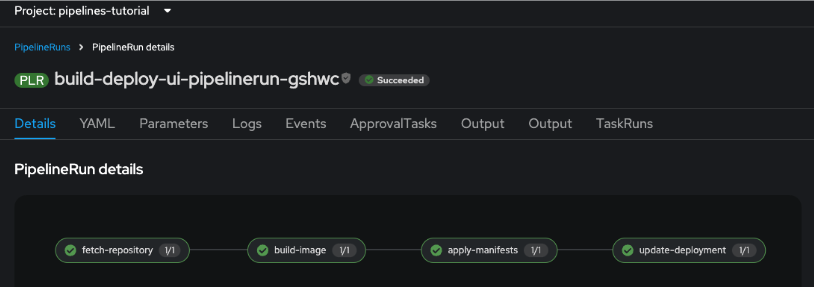

In order to demonstrate the usage of Tekton Results, we begin by creating a pipeline and executing it via the PipelineRun shown below (Figure 3). Later we will delete the PipelineRun in order to showcase how Tekton Results preserves the container logs created by the PipelineRun. To reproduce the creation of the pipeline you can use this link.
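Before inspecting the database, wait until the PipelineRun has finished. The sketch below uses `oc wait` on the PipelineRun's `Succeeded` condition. The name `wait_for_pipelinerun`, its default namespace, and the 15-minute timeout are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: block until a PipelineRun reports Succeeded=True or the timeout expires.
wait_for_pipelinerun() {
  local run="$1" ns="${2:-pipelines-tutorial}" timeout="${3:-15m}"
  oc wait --for=condition=Succeeded "pipelinerun/${run}" -n "$ns" --timeout="$timeout"
}
```

For example, run `wait_for_pipelinerun build-deploy-ui-pipelinerun-gshwc pipelines-tutorial`. If the run fails, the condition becomes `False` and `oc wait` times out. In that case, check the run with `tkn pipelinerun describe`.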

When the pipeline execution finishes, listing the databases in PostgreSQL shows the following:

- postgres: The default database created by PostgreSQL, often used for administrative tasks.

- tekton-results: The database used by the Tekton Results service to track the history, logs, and status of executed Tekton pipelines.

See below:

```
sh-4.4$ psql -U result -l
                         List of databases
      Name      |  Owner   | Encoding |  Collate   |   Ctype
----------------+----------+----------+------------+------------
 postgres       | postgres | UTF8     | en_US.utf8 | en_US.utf8
 tekton-results | result   | UTF8     | en_US.utf8 | en_US.utf8
```

After connecting to the tekton-results database, we list its tables as shown below and find two: records and results. The records table stores pointers to the detailed logs and historical data of individual TaskRuns and PipelineRuns. In addition, each record includes the full YAML manifest of the TaskRun or PipelineRun CR. The results table stores the overall status and outcome of pipeline executions, such as the success or failure of a pipeline, the start and end time of a run, and other high-level metadata. Each result is associated with one or more records. See below:

```
sh-4.4$ psql -h tekton-results-postgres-service.openshift-pipelines.svc.cluster.local -p 5432 -d tekton-results -U result
tekton-results=> \c tekton-results
You are now connected to database "tekton-results" as user "result".
tekton-results=> \dt
         List of relations
 Schema |  Name   | Type  | Owner
--------+---------+-------+--------
 public | records | table | result
 public | results | table | result
```

To list the results that correspond to PipelineRun and TaskRun objects in a specific namespace, we execute the following command:

```
$ opc results list --insecure --addr ${RESULTS_API} pipelines-tutorial
Name                                                              Start                           Update
pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1   2024-09-12 15:39:59 -0400 EDT   2024-09-12 15:42:01 -0400 EDT
```

To list the records that are linked to the result above, we execute the following command:

```
$ opc results records list --addr ${RESULTS_API} pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1
```

The output lists records for the container logs produced by the pipeline execution and for the YAML manifests of the PipelineRun and its TaskRuns. It contains four container logs, four TaskRuns, and one PipelineRun, which matches what we executed:

```
Name Type Start Update
pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1/records/4ce2a58a-74b2-3685-a260-11052f39e066 results.tekton.dev/v1alpha2.Log 2024-09-12 15:41:46 -0400 EDT 2024-09-12 15:41:46 -0400 EDT
pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1/records/aa9b1e89-e60d-3b97-91ac-8b3ab78a561c results.tekton.dev/v1alpha2.Log 2024-09-12 15:42:01 -0400 EDT 2024-09-12 15:42:01 -0400 EDT
pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1/records/d23b3e4f-53f9-4dbd-9e97-cbd7ba9b4bcc tekton.dev/v1beta1.TaskRun 2024-09-12 15:41:40 -0400 EDT 2024-09-12 15:41:46 -0400 EDT
pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1/records/0de8cca2-a1db-4740-ac25-3327bd4711d1 tekton.dev/v1beta1.PipelineRun 2024-09-12 15:39:59 -0400 EDT 2024-09-12 15:42:01 -0400 EDT
pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1/records/7ac07d33-448a-36bd-8173-5489993cb4b1 results.tekton.dev/v1alpha2.Log 2024-09-12 15:42:01 -0400 EDT 2024-09-12 15:42:01 -0400 EDT
pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1/records/dc117797-46a6-45e9-ae2f-eed8d843c8eb tekton.dev/v1beta1.TaskRun 2024-09-12 15:39:59 -0400 EDT 2024-09-12 15:40:36 -0400 EDT
pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1/records/4b90d45f-e1d7-4e1f-9080-a2d27b73ba9e tekton.dev/v1beta1.TaskRun 2024-09-12 15:41:46 -0400 EDT 2024-09-12 15:42:01 -0400 EDT
pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1/records/ccf96c3f-ab43-43b0-b979-8d931d557e0c tekton.dev/v1beta1.TaskRun 2024-09-12 15:40:36 -0400 EDT 2024-09-12 15:41:40 -0400 EDT
pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1/records/13062eab-f9a9-35ff-8d6c-38c9957cd2ea results.tekton.dev/v1alpha2.Log 2024-09-12 15:40:36 -0400 EDT 2024-09-12 15:40:36 -0400 EDT
```

Figure 4 shows the container logs that were created by the execution of the “apply-manifest” TaskRun.
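Each Log record name maps to a logs path. For example, the record `records/4ce2a58a-74b2-3685-a260-11052f39e066` is read back as `logs/4ce2a58a-74b2-3685-a260-11052f39e066` in the `opc results logs get` command used in this article. That mapping lets you dump every log of a result in one pass. The sketch below parses the tabular `opc results records list` output, so it assumes that column layout (name first, type second). It decodes each log with the same `jq`/`base64` pipeline as the article. `dump_result_logs` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every archived log of one result, decoded.
# Keeps rows whose TYPE column ends in ".Log", rewrites /records/ to /logs/,
# then reuses the article's "jq -r .data | base64 -d" decoding for each path.
dump_result_logs() {
  local result="$1" path
  opc results records list --insecure --addr "${RESULTS_API}" "$result" \
    | awk '$2 ~ /\.Log$/ { print $1 }' \
    | sed 's#/records/#/logs/#' \
    | while read -r path; do
        echo "=== ${path}"
        # </dev/null keeps opc from consuming the loop's input.
        opc results logs get --insecure --addr "${RESULTS_API}" "$path" </dev/null \
          | jq -r .data | base64 -d
        echo
      done
}
```

For example, `dump_result_logs pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1` prints each archived log of this run, preceded by its path.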

To verify that the container logs created by each TaskRun of our PipelineRun are preserved, we delete the PipelineRun with the following command:

```
$ oc delete pipelinerun build-deploy-ui-pipelinerun-gshwc
```

Now that we have deleted the PipelineRun, let’s display the container logs created by the “apply-manifest” TaskRun with the following command:

```
$ opc results logs get --insecure --addr ${RESULTS_API} pipelines-tutorial/results/0de8cca2-a1db-4740-ac25-3327bd4711d1/logs/4ce2a58a-74b2-3685-a260-11052f39e066 | jq -r .data | base64 -d
```

The command returns exactly the same logs that we saw before deleting the PipelineRun. This confirms that Tekton Results preserves the container logs in the persistent volume claim (PVC) created during the Tekton Results deployment. See below:

```
[prepare] 2024/09/12 19:41:40 Entrypoint initialization
[apply] Applying manifests in k8s directory
[apply] deployment.apps/pipelines-vote-ui created
[apply] route.route.openshift.io/pipelines-vote-ui created
[apply] service/pipelines-vote-ui created
[apply] -----------------------------------
```

Because Tekton Results also comes with a dashboard, we can easily check the status, duration, and execution time of the deleted PipelineRun. See Figure 5.

Conclusion

Using Tekton Results alongside the pruner component in OpenShift Pipelines is an effective way to manage both pipeline history and cluster resources. The pruner clears out completed TaskRun and PipelineRun objects to reduce disk usage and improve performance. Tekton Results archives critical logs and metadata, enabling long-term visibility and traceability. Together, they allow teams to maintain a clean, responsive environment without sacrificing the historical data needed for troubleshooting and audits.

In this article we used a PVC to store logs for Tekton Results, but Tekton Results also integrates with other storage solutions, such as Google Cloud Storage and S3 bucket storage. Alternatively, if you use the OpenShift logging solution based on Loki, you can configure forwarding of the logging information to LokiStack. This option provides better scalability for higher loads.