The edge is often a remote, harsh place where tasks must keep running autonomously under adverse conditions, typically with no on-site personnel to act on these critical systems. Automating workflows at the edge is therefore crucial for organizations that want to reduce latency, maintain robust security standards, improve overall efficiency, and keep operations running in remote environments where manual intervention is not possible.

In this article, I will demonstrate how to enable pipelines within Red Hat OpenShift AI (RHOAI) running on a single-node OpenShift instance. We will walk through deploying the pipeline server and creating the necessary pipeline definitions to fully automate the machine learning (ML) and data science workflow.

The workflow automation solution

The solution to these issues lies in task automation. OpenShift AI implements Kubeflow Pipelines 2.0. A pipeline is a workflow definition consisting of steps, inputs, and output artifacts stored in S3-compatible storage. These pipelines allow organizations to standardize and automate machine learning workflows that might include the following steps:

- Data extraction: Retrieving and collecting data automatically from sensors or databases is a vital step before training models.

- Data processing: This consists of manipulating and transforming the data into a format that can be easily interpreted by the model.

- Model training: With pipelines we can inject the data into the model and trigger the training process automatically.

- Model validation: Comparing the performance of the new model against the previous one to ensure improvements.

- Model saving: Once the model is trained and validated, the last step is to store it automatically in an S3 bucket.
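To make the five stages above concrete, here is a minimal sketch in plain Python. It is not Kubeflow Pipelines code: every function name, the toy data, and the "model" (which simply predicts the mean) are assumptions made for illustration, and the dictionary named `store` stands in for the S3 bucket.

```python
from statistics import mean


def extract():
    """Stand-in for reading records from sensors or a database."""
    return [
        {"t": 0, "value": 10.0},
        {"t": 1, "value": 12.0},
        {"t": 2, "value": None},  # an incomplete reading
        {"t": 3, "value": 14.0},
    ]


def process(rows):
    """Drop incomplete records and keep only the numeric values."""
    return [row["value"] for row in rows if row["value"] is not None]


def train(values):
    """'Train' a trivial model that always predicts the mean of the data."""
    return {"prediction": mean(values)}


def validate(new_model, old_model, values):
    """Return True when the new model's mean absolute error is not worse."""
    def mae(model):
        return mean(abs(v - model["prediction"]) for v in values)

    return mae(new_model) <= mae(old_model)


def save(model, store):
    """Stand-in for uploading the model to an S3 bucket."""
    store["model"] = model


def run_pipeline(old_model, store):
    """Chain the stages; only save the candidate if validation passes."""
    values = process(extract())
    candidate = train(values)
    if validate(candidate, old_model, values):
        save(candidate, store)
    return candidate
```

In a real pipeline each function would be a separate step with its own container image, and the values passed between functions would be artifacts written to object storage.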

Create a new S3 bucket

The pipeline server requires an S3-compatible storage solution to store artifacts generated during pipeline execution. In my environment, I already have a MinIO bucket for storing the data, but we will create a separate one specifically for the pipelines. You can deploy MinIO in your cluster by applying these resources. Figure 1 illustrates the following steps:

- Access the MinIO UI page and log in using your credentials.

- On the left-hand menu bar, click on Buckets to list the existing buckets.

- Then select Create Bucket.

- Specify the desired Bucket Name. I will use `pipelines`.

- Click on the Create Bucket button.
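If you prefer to script this step instead of using the MinIO UI, the following sketch shows one possible approach. It assumes the third-party `boto3` library is installed and that the MinIO API is reachable from where the code runs. The helper names `ensure_bucket` and `minio_client` are hypothetical, and the endpoint and credentials in the usage comment are the example values used later in this article.

```python
def ensure_bucket(client, name):
    """Create the bucket if it is missing; return True when it was created."""
    existing = {b["Name"] for b in client.list_buckets().get("Buckets", [])}
    if name in existing:
        return False
    client.create_bucket(Bucket=name)
    return True


def minio_client(endpoint, access_key, secret_key):
    """Build an S3 client that talks to MinIO instead of AWS."""
    import boto3  # third-party; assumed to be installed in your environment

    return boto3.client(
        "s3",
        endpoint_url=endpoint,
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
    )


# Example usage (adjust the values to your environment):
# client = minio_client(
#     "http://minio-service.minio.svc.cluster.local:9000", "minio", "minio123"
# )
# ensure_bucket(client, "pipelines")
```

Because `ensure_bucket` first lists the existing buckets, running it twice is harmless: the second call finds the bucket and does nothing.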

Now that the bucket is ready to be consumed, let’s continue with the creation of the pipeline server.

Configure a pipeline server

The pipeline server instance creates the necessary pods to manage and execute data science pipelines. When we create the server, a new DataSciencePipelinesApplication custom resource is created in the OpenShift cluster, providing an API endpoint and a database for storing metadata.

- Open your Data Science Project in Red Hat OpenShift AI.

- In your project dashboard, navigate to the Pipelines tab.

- Before we can start using pipelines, we need the server. Click on Configure pipeline server.

- Here we need to create a new object storage connection that uses our new bucket. Complete the fields with your S3 data:

  - Access key: Use your MinIO username. The most common one is `minio`.

  - Secret key: Enter the password for that user. In my case, it is `minio123`.

  - Endpoint: Specify the MinIO API endpoint. Mine is `http://minio-service.minio.svc.cluster.local:9000`.

  - Region: The default value is `us-east-1`, but it's not really used.

  - Bucket: Indicate the name of the pipelines bucket created in the previous step. Ours is `pipelines`.

- Once all fields are filled, scroll down and click Configure pipeline server.

The pipeline server deployment will begin, creating different components such as a MariaDB database where the pipeline results will be stored. Wait until the process is complete. Keep in mind that it might take a few minutes to finish. Once ready, the Import pipeline option should appear (see Figure 2).

Once the server is configured, it's time to dive into the world of automation. Let's create and run our first pipeline.

Automating with pipelines

Red Hat OpenShift AI lets you either import an existing YAML file containing the pipeline's code or create a new pipeline within JupyterLab using the Elyra extension. Elyra provides a visual editor for building pipelines from notebooks or Python files.

Let's use this second approach:

- In your Red Hat OpenShift AI dashboard, click on the Data Science Project tab in the left menu and select the project you are working on.

- In your project page, select Workbenches and create/open your workbench. This workbench needs to have a DataConnection attached to store the dataset that will be generated in the following steps.

- Import the contents of this GitHub repository. It contains the notebooks needed to generate the sample data and the code for each node of the pipeline.

- Navigate to the pipelines folder.

- Open the 0.Transform_dataset.ipynb notebook and run it in order to store the sample data into the data bucket in MinIO. This way we can simulate extracting the data from a database.

- In the Launcher menu, locate the Pipeline Editor item under the Elyra section, as shown in Figure 3.

- This new dashboard can be used to import and create pipelines, but first we need to configure the runtime. In the upper-right corner click on the Open Panel icon (see Figure 4):

- Scroll down in the panel until you reach the Generic Node Defaults section and set the Runtime Image field as Pytorch with CUDA and Python 3.11 (UBI9).

- Click the Close Panel icon again.

- At this point, we can start importing the notebooks and chaining them to create pipelines. We are going to use the notebooks available in the pipelines folder within this Git repository, which you should have previously cloned in your environment.

- Start by dragging the Collect_data.ipynb notebook to the central dashboard.

- Then drop the Re-train_model.ipynb next to the first one.

- Place the Save_model.ipynb at the end of the line.

- To connect them, click on the Output Port of the first box and drag it to the Input Port of the second box. Repeat the step between the second and the last notebook. Figure 5 illustrates how the diagram should look:

- Configure the Collect_data node:

- In the first node, we are going to download the dataset from our S3 data bucket. To do this, we will have to specify the variables necessary for the connection with our storage. Right-click on the first pipeline node and select Open Properties, and a new tab will appear at the right side.

- Make sure you are in the NODE PROPERTIES section. There, scroll down to the Environment Variables area. If we fill in those fields, the S3 connection data will be stored in our pipeline code. For safety reasons, remove all the environment variables that have been automatically populated.

- The S3 connection values will be provided instead using the secret that was generated when the `storage` DataConnection was created. In the same NODE PROPERTIES tab, locate the Kubernetes Secrets section and press Add. Complete the form with the following values, clicking Add between each parameter.

| # | Environment Variable | Secret Name | Secret Key |
|---|---|---|---|
| 1 | AWS_ACCESS_KEY_ID | storage | AWS_ACCESS_KEY_ID |
| 2 | AWS_SECRET_ACCESS_KEY | storage | AWS_SECRET_ACCESS_KEY |
| 3 | AWS_S3_ENDPOINT | storage | AWS_S3_ENDPOINT |
| 4 | AWS_DEFAULT_REGION | storage | AWS_DEFAULT_REGION |
| 5 | AWS_S3_BUCKET | storage | AWS_S3_BUCKET |

Also, we need to specify the output file that will be injected into the next node. Scroll down in the menu and locate the Output Files field. Click Add and type the following information:

| # | Output Files |
|---|---|
| 1 | data/historical.csv |

- Configure the Re-train_model node:

- In this case, to train the model we are going to use the dataset coming from the previous node, so we don’t need to specify the S3 connection.

However, we need to indicate the output file that will result from the training process. Right-click on the second pipeline node and select Open Properties. Locate the Output Files field and type the following value:

| # | Output Files |
|---|---|
| 1 | models/edge/1/model.onnx |

- Configure the Save_model node:

- This notebook uses the S3 data connection variables to upload the trained model. To provide the values for the different connection parameters, we need to add them as a secret. Right-click on the Save_model.ipynb box and select Open Properties.

Again, delete all the entries in the Environment Variables section and complete the Kubernetes Secrets section with the information in the following table:

| # | Environment Variable | Secret Name | Secret Key |
|---|---|---|---|
| 1 | AWS_ACCESS_KEY_ID | storage | AWS_ACCESS_KEY_ID |
| 2 | AWS_SECRET_ACCESS_KEY | storage | AWS_SECRET_ACCESS_KEY |
| 3 | AWS_S3_ENDPOINT | storage | AWS_S3_ENDPOINT |
| 4 | AWS_DEFAULT_REGION | storage | AWS_DEFAULT_REGION |
| 5 | AWS_S3_BUCKET | storage | AWS_S3_BUCKET |

- Rename the file to `Forecast.pipeline` and click Save Pipeline in the toolbar (Figure 6).

- Now you can press the Play button to run the pipeline. Verify that the Runtime Configuration is set to Data Science Pipeline and click OK.

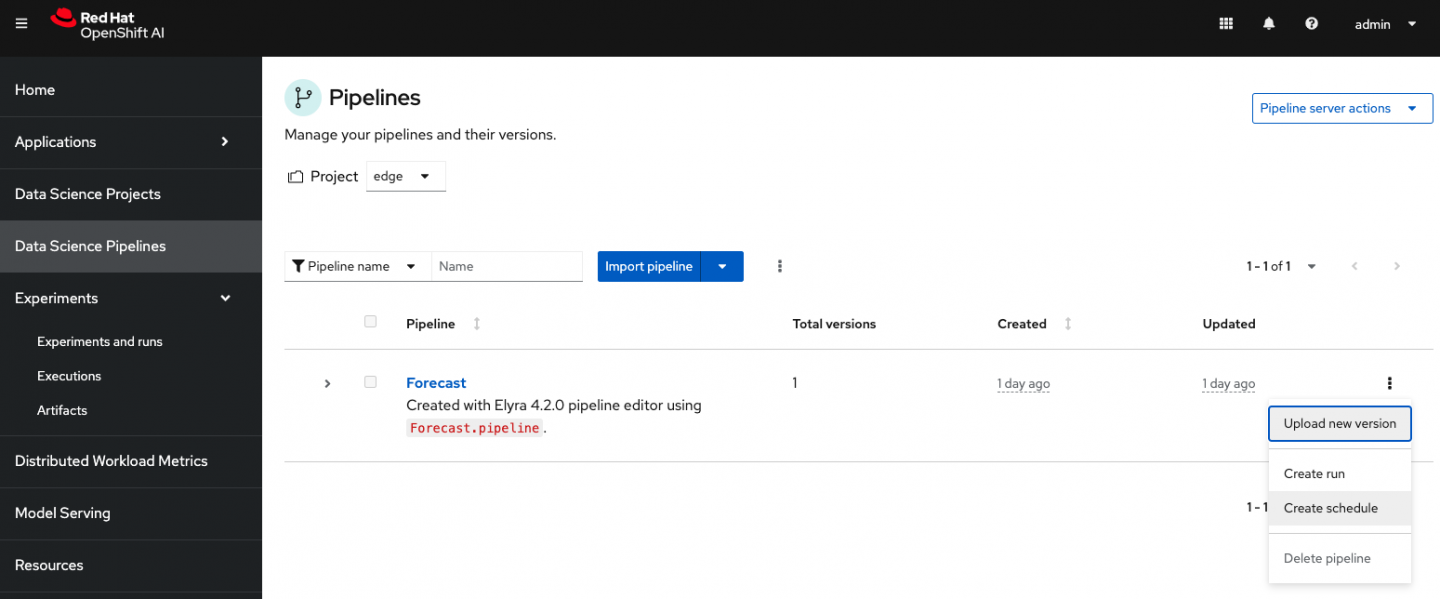

- Navigate back to your project dashboard and open the Pipelines tab. Figure 7 shows the new pipeline within RHOAI. Open the Forecast pipeline.

- A new page opens showing a diagram of the different pipeline steps. Expand the drop-down menu under the Actions button at the top-right, and select View runs. There you can select your pipeline run.

Here you can track the running status of the pipeline. When the pipeline execution finishes, all steps will be ticked green as presented in Figure 8.
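The run view mirrors the three-node chain built in the Pipeline Editor. As a rough illustration of why the steps complete in that order, the sketch below models the chain as a dependency graph and derives an execution order with Python's standard `graphlib` module. This only illustrates the ordering idea; it is not how Elyra or the pipeline server schedule nodes internally, and the `dependencies` dictionary and `execution_order` helper are assumptions made for this example.

```python
from graphlib import TopologicalSorter

# Each node maps to the set of nodes that must finish before it starts.
dependencies = {
    "Collect_data": set(),
    "Re-train_model": {"Collect_data"},
    "Save_model": {"Re-train_model"},
}


def execution_order(deps):
    """Return the nodes in an order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())


for step, node in enumerate(execution_order(dependencies), start=1):
    print(step, node)
```

For a simple chain like this one there is only one valid order, which is why a failure in Collect_data prevents the two later steps from running.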

The model should also have been uploaded to your S3 bucket. Navigate back to your MinIO dashboard and verify that the model has been saved in the storage/models/edge/1/model.onnx path. You can also verify that the artifacts generated during the pipeline execution are stored in the pipelines bucket.
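The same check can be scripted. The sketch below assumes it runs somewhere the five `AWS_*` variables from the Kubernetes Secrets tables are set (for example, inside the workbench), that the third-party `boto3` library is available, and that the bucket is named `storage`, as the path above suggests. The helper names `s3_settings` and `object_exists` are hypothetical.

```python
import os

# The variables injected through the Kubernetes Secrets section.
S3_VARS = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_S3_ENDPOINT",
    "AWS_DEFAULT_REGION",
    "AWS_S3_BUCKET",
)


def s3_settings(env=os.environ):
    """Read the connection values and fail early if any variable is missing."""
    missing = [name for name in S3_VARS if not env.get(name)]
    if missing:
        raise KeyError("missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in S3_VARS}


def object_exists(client, bucket, key):
    """Return True if an object with exactly this key is in the bucket."""
    response = client.list_objects_v2(Bucket=bucket, Prefix=key)
    return any(obj["Key"] == key for obj in response.get("Contents", []))


# Example usage (requires boto3 and a reachable MinIO endpoint):
# import boto3
# cfg = s3_settings()
# client = boto3.client(
#     "s3",
#     endpoint_url=cfg["AWS_S3_ENDPOINT"],
#     aws_access_key_id=cfg["AWS_ACCESS_KEY_ID"],
#     aws_secret_access_key=cfg["AWS_SECRET_ACCESS_KEY"],
#     region_name=cfg["AWS_DEFAULT_REGION"],
# )
# print(object_exists(client, cfg["AWS_S3_BUCKET"], "models/edge/1/model.onnx"))
```

Reading the values from the environment, rather than hardcoding them, matches the reason the environment variables were removed from the node properties earlier: the credentials never end up in the pipeline code.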

Schedule recurring executions

We’ve just created and executed our pipeline, and it has completed all the steps successfully. Our AI/ML lifecycle is now automated, but we manually triggered the execution. We can also automate this with a recurring task as follows:

- Navigate back to the Data Science Pipelines tab.

- Click on the three vertical dots on the right side of our Forecast pipeline and select Create schedule (refer to Figure 9).

- Complete the setup page with the following parameters:

  - Experiment: Select the `forecast` run generated when the pipeline was executed for the first time.

  - Name: Enter a meaningful name like `Daily run`.

  - Trigger type: Select `Periodic`.

  - Run every: In my case, I want to execute this pipeline every day, so I configure it for `1` Day.

  - Pipeline: The `Forecast` pipeline should be selected.

  - Pipeline version: The version should be `Forecast` too.

- Once all the fields are filled in, click Create schedule.
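To picture what a Periodic trigger with a one-day interval means, the following sketch lists the first few run times for a fixed interval. It is only an illustration of fixed-interval scheduling, not the scheduler used by the pipeline server; the `next_runs` helper and the start date are arbitrary choices for the example.

```python
from datetime import datetime, timedelta


def next_runs(first_run, every, count):
    """Return `count` run times spaced `every` apart, starting at `first_run`."""
    return [first_run + i * every for i in range(count)]


# Three daily runs starting from an arbitrary example date.
for run in next_runs(datetime(2025, 1, 1, 2, 0), timedelta(days=1), 3):
    print(run.isoformat())
# 2025-01-01T02:00:00
# 2025-01-02T02:00:00
# 2025-01-03T02:00:00
```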

And just like that, our pipeline will run every day, collecting new data, retraining the model, and saving the updated version to the bucket. It's all fully automated and running at the edge.

Wrap up

In this article, we explored how to use pipelines within Red Hat OpenShift AI to automate the full AI/ML lifecycle, from data extraction to model training and storage, on a single-node OpenShift instance. Automating workflows at the edge is crucial for reducing latency, ensuring security, and improving overall efficiency.